Introduction

A common pattern for designing web applications is to have two distinct services: one provides a UI and the other provides an API for the UI to consume. In production settings, we put these services behind a proxy or gateway so that they share the same origin. In development, however, it’s common to run each piece in its own origin and configure CORS (cross-origin resource sharing) so that they can talk to each other.

But I say no more: no longer should we use CORS for local development in this, a dockerized world. In this post I will show you how to set up a local proxy using nginx to develop an application using React and FastAPI.

Code

Basic setup

Let’s start by building a basic FastAPI API:

    # src/example/api.py
    from typing import TypedDict

    from fastapi import FastAPI

    app = FastAPI(
        root_path="/api/v1",
        title="Example API",
        version="0.1.0",
    )


    class Quote(TypedDict):
        """A quote."""

        quote: str


    @app.get("/quotes/random")
    async def random_quote() -> Quote:
        """Get a random quote."""
        return {"quote": "The best is yet to come."}

A crucial part here is that we want to serve all the API endpoints under an /api namespace. For good measure I used /api/v1, just in case we want to introduce a new version.

Our UI is going to look like this to begin with:

    // src/App.tsx
    import "./App.css";

    function App() {
      return <p>Example app</p>;
    }

    export default App;

And we are going to run both using a compose.yaml file that looks like:

    # compose.yaml
    services:
      ui:
        build: ./ui
        ports:
          - "5173:5173"
        command: ["npm", "run", "dev", "--", "--host", "0.0.0.0"]
      api:
        build: ./api
        ports:
          - "8000:8000"
        command:
          ["uv", "run", "fastapi", "dev", "--host", "0.0.0.0", "src/example/api.py"]

The file structure of our project looks like:

    nginx-proxy-example on main
    ❯ tree
    .
    ├── README.md
    ├── api
    │   ├── Dockerfile
    │   ├── README.md
    │   ├── pyproject.toml
    │   ├── src
    │   │   └── example
    │   │       ├── __init__.py
    │   │       └── api.py
    │   └── uv.lock
    ├── compose.yaml
    └── ui
        ├── Dockerfile
        ├── README.md
        ├── eslint.config.js
        ├── index.html
        ├── package-lock.json
        ├── package.json
        ├── public
        │   └── vite.svg
        ├── src
        │   ├── App.css
        │   ├── App.tsx
        │   ├── index.css
        │   ├── main.tsx
        │   └── vite-env.d.ts
        ├── tsconfig.app.json
        ├── tsconfig.json
        ├── tsconfig.node.json
        └── vite.config.ts

You can explore the whole project in its initial state on GitHub.

In this state we get basic development functionality. We can access the different routes:

- http://localhost:8000/api/v1/redoc for API docs.

- http://localhost:8000/api/v1/quotes/random for a random quote.

- http://localhost:5173 to visit the UI.

However, we don’t have any sort of live reload or HMR (hot module replacement).

Live reloading with docker watch

We used to solve live reloading with bind-mounted volumes in Docker Compose. However, bind mounts lead to performance and cross-platform development issues, which is why we’re going to use file watch:

    # compose.yaml
    services:
      ui:
        build: ./ui
        ports:
          - "5173:5173"
        command: ["npm", "run", "dev", "--", "--host", "0.0.0.0"]
        develop:
          watch:
            - action: sync
              path: ./ui/src
              target: /app/src
            - action: rebuild
              path: ./ui/package.json
      # [...]

This will watch for changes in any files under ./ui/src and sync them over to the running container under /app/src. From there, Vite takes over and performs the HMR.

The rebuild action watches package.json and, when it changes, rebuilds the image (updating the node_modules/ folder inside it) and restarts the container using the new image.

We can perform a similar trick for the api service, watching the ./api/src folder and the ./api/pyproject.toml file. You can explore the updated file on GitHub.
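One way to read the watch rules is as a lookup from a changed path to an action. The Python sketch below is only a simplified model of that idea, not how Compose implements it. The `WATCH_RULES` table and the `action_for` helper are hypothetical names, and the actions for the api service are assumed by analogy with the ui service.

```python
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical rule table: (watched path, action), relative to the project root.
# The ui entries come from compose.yaml above; the api entries are assumed
# to mirror them (sync for source files, rebuild for the dependency manifest).
WATCH_RULES = [
    ("ui/src", "sync"),
    ("ui/package.json", "rebuild"),
    ("api/src", "sync"),
    ("api/pyproject.toml", "rebuild"),
]


def action_for(changed: str) -> Optional[str]:
    """Return the action triggered by a changed file, or None if unwatched."""
    path = PurePosixPath(changed)
    for watched, action in WATCH_RULES:
        watched_path = PurePosixPath(watched)
        # A rule matches the watched path itself or anything beneath it.
        if path == watched_path or watched_path in path.parents:
            return action
    return None


print(action_for("ui/src/App.tsx"))      # a source edit is synced
print(action_for("api/pyproject.toml"))  # a dependency change rebuilds
```

The split reflects the idea in the text: source edits are cheap to copy into a running container, while dependency changes need a fresh image.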

Talking to the API

Let’s start simply by fetching the quote from our server, using @tanstack/react-query for data fetching and zod for data validation.

import z from "zod";

import "./App.css";

import { useQuery } from "@tanstack/react-query";

const quoteSchema = z.object({

quote: z.string(),

});

type Quote = z.infer<typeof quoteSchema>;

const fetchRandomQuote = async (): Promise<Quote> => {

const response = await fetch("http://localhost:8000/api/v1/quotes/random");

const data = await response.json();

return quoteSchema.parse(data);

};

function App() {

const { data, isError, isLoading, error } = useQuery({

queryKey: ["quotes", "random"],

queryFn: fetchRandomQuote,

});

if (isLoading) {

return <p>Loading your quote...</p>;

}

if (isError || !data) {

console.error(error);

return <p>Error fetching quote</p>;

}

return <p>{data.quote}</p>;

}

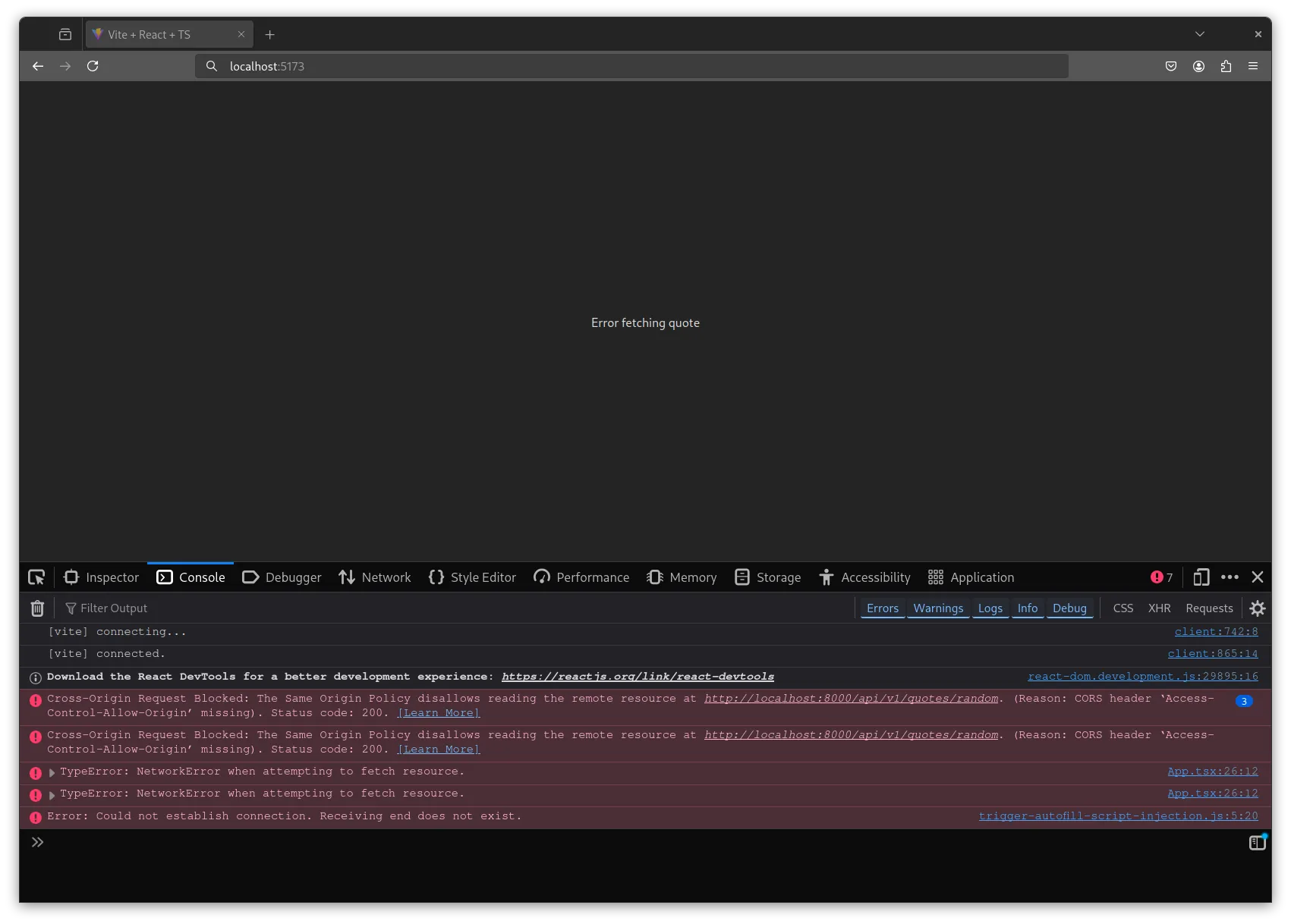

export default App;This solution looks more complicated than it should be, but such is the world we

live in. Furthermore, it has some shortcomings, we would need to update the

host part of our URL (localhost:8000) depending of the environment. And, it

requires CORS to be setup in our API server:

Enabling CORS is a security liability that might be justifiable in development, but things could go horribly wrong if it is misconfigured in production.

Using a proxy

Let’s address both issues using nginx as a proxy. We need to configure it so that:

- Any request coming to /api is forwarded to the api service on port 8000.

- Any other request goes to the ui service on port 5173.

To achieve this we use the following nginx.conf file:

    # nginx.conf
    worker_processes 1;

    events {
      worker_connections 1024;
    }

    http {
      upstream ui {
        server ui:5173;
      }

      upstream api {
        server api:8000;
      }

      server {
        listen 8080;
        listen [::]:8080;

        location ~* \.(eot|otf|ttf|woff|woff2)$ {
          add_header Access-Control-Allow-Origin *;
        }

        location /api {
          proxy_pass http://api;
          proxy_http_version 1.1;
          proxy_set_header Host $host;
          break;
        }

        location / {
          proxy_pass http://ui;
          proxy_http_version 1.1;
          proxy_set_header Host $host;
          break;
        }
      }
    }

Let’s focus on the http block. Here we define two upstreams, ui and api, specifying the desired ports. In the server block we listen on port 8080, though this could be any port of your choosing. Then we enable CORS for fonts (for some reason). And, finally, we get to the meat: we forward anything incoming under /api to the api upstream, and anything else under / goes to the ui upstream.
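To see how the three location blocks divide up requests, here is a small Python model of the selection logic. It is a sketch of nginx's behaviour for this particular config, not a general implementation, and `route` and `FONT_RE` are hypothetical names. It relies on the rule that nginx checks regex locations before falling back to the longest matching prefix location.

```python
import re

# Mirrors `location ~* \.(eot|otf|ttf|woff|woff2)$` (case-insensitive).
FONT_RE = re.compile(r"\.(eot|otf|ttf|woff|woff2)$", re.IGNORECASE)


def route(path: str) -> str:
    """Return which location block would handle a request path."""
    # Regex locations win over plain prefix locations, so fonts come first.
    # That block has no proxy_pass, so nginx answers it itself.
    if FONT_RE.search(path):
        return "fonts"
    # `location /api` is a plain prefix match, so it also catches
    # paths such as /apiary, not just /api/...
    if path.startswith("/api"):
        return "api"
    # `location /` matches every remaining request.
    return "ui"


for path in ["/api/v1/quotes/random", "/", "/src/main.tsx", "/assets/Inter.woff2"]:
    print(path, "->", route(path))
```

Writing the prefix as `/api/` in the nginx config would restrict the match to the namespace itself. The config above keeps the shorter form.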

Then we update our compose.yaml to include a new service:

    # compose.yaml
    services:
      proxy:
        build: ./proxy
        ports:
          - "8080:8080"
        command: ["nginx", "-g", "daemon off;"]
        develop:
          watch:
            - action: rebuild
              path: ./proxy/nginx.conf
      ui:
        build: ./ui
        command: ["npm", "run", "dev", "--", "--host", "0.0.0.0"]
        develop:
          # ...
      api:
        build: ./api
        command:
          ["uv", "run", "fastapi", "dev", "--host", "0.0.0.0", "src/example/api.py"]
        develop:
          # ...

As you can see, we no longer need to expose ports from the UI or API services. All communication happens through the proxy service.

Now we can update our fetch call to:

    // ...
    const response = await fetch("/api/v1/quotes/random");
    // ...

This works. However, we lose the WebSocket connection that HMR relies on. To restore it, we set the Upgrade and Connection headers in the proxy:

# nginx.conf

# ...

http {

map $http_upgrade $connection_upgrade {

default upgrade;

'' close;

}

# ...

server {

# ...

location / {

proxy_pass http://ui;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

proxy_set_header Host $host;

break;

}

# ...

}

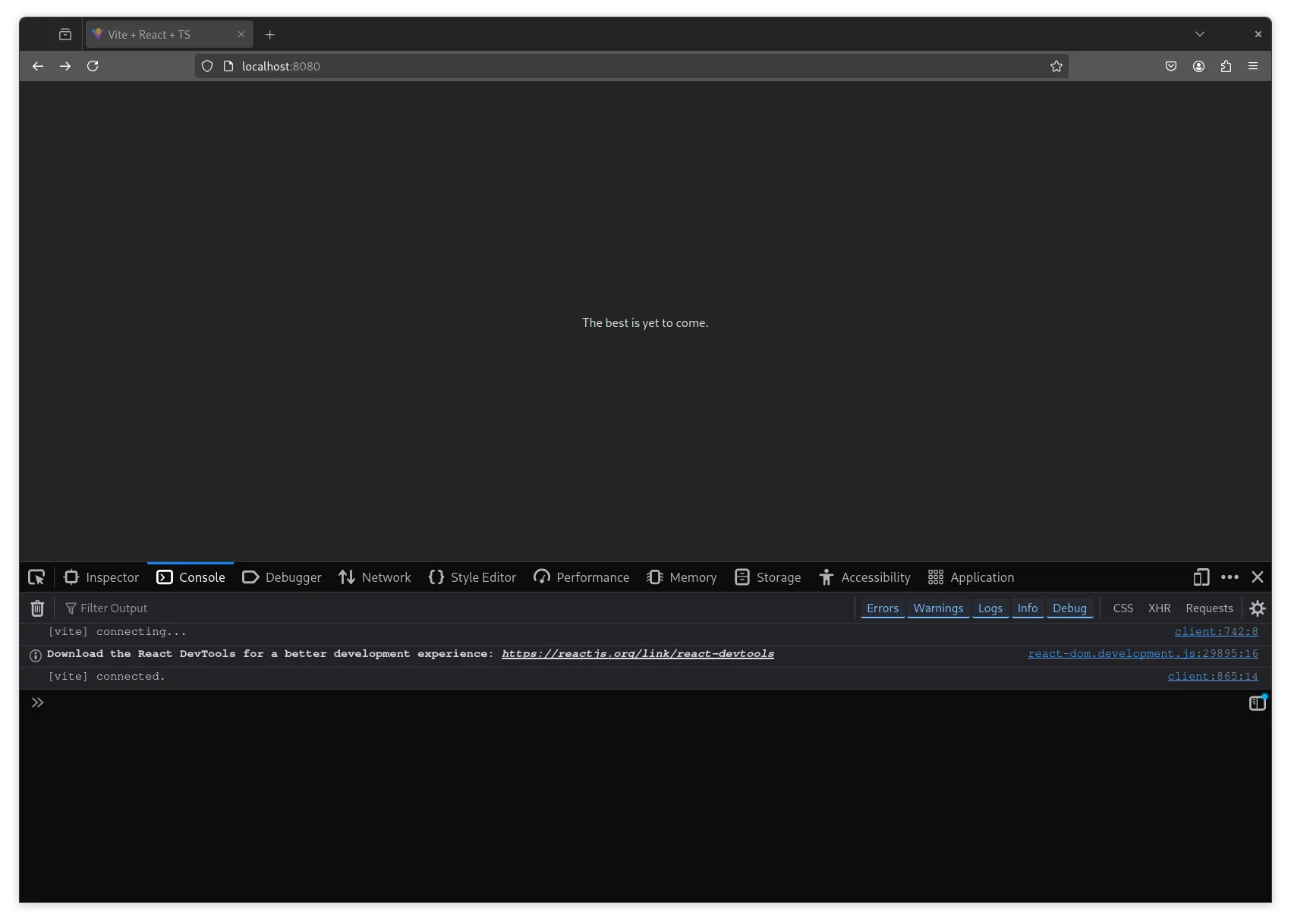

}You can now access the app using https://localhost:8080:
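The `map` block in the nginx.conf above is a two-entry lookup: an empty Upgrade request header yields `close`, and anything else yields `upgrade`. The Python sketch below models the headers the proxy would forward for the `location /` block. The function names are hypothetical, and Host is left out for brevity.

```python
def connection_upgrade(http_upgrade: str) -> str:
    """Model of: map $http_upgrade $connection_upgrade { default upgrade; '' close; }"""
    return "close" if http_upgrade == "" else "upgrade"


def forwarded_headers(client_headers: dict) -> dict:
    """Upgrade/Connection headers sent to the ui upstream (Host omitted)."""
    upgrade = client_headers.get("Upgrade", "")
    headers = {"Connection": connection_upgrade(upgrade)}
    # nginx does not pass a header whose proxy_set_header value is empty,
    # so Upgrade is only forwarded when the client actually sent one.
    if upgrade:
        headers["Upgrade"] = upgrade
    return headers


# A WebSocket handshake from the HMR client keeps its upgrade semantics...
print(forwarded_headers({"Upgrade": "websocket"}))
# ...while an ordinary page request is proxied as a plain HTTP request.
print(forwarded_headers({}))
```

Without these two headers the upstream would see a plain HTTP request, the handshake would never complete, and HMR would stop working behind the proxy.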

In this iteration you can visit:

- http://localhost:8080/api/v1/redoc for API docs.

- http://localhost:8080/api/v1/quotes/random for a random quote.

- http://localhost:8080 to visit the UI.

All from the same origin. You can browse the finished code on GitHub.
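"Same origin" here means the same scheme, host, and port. The short Python check below makes the difference between the two setups concrete. The `origin` and `same_origin` helpers are hypothetical and only approximate the browser's rule.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Return the (scheme, host, port) triple that defines a URL's origin."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return (parts.scheme, parts.hostname, port)


def same_origin(a: str, b: str) -> bool:
    return origin(a) == origin(b)


# Before the proxy: UI and API differ by port, so the browser applies CORS.
print(same_origin("http://localhost:5173", "http://localhost:8000/api/v1/quotes/random"))

# Behind the proxy: everything is served from localhost:8080.
print(same_origin("http://localhost:8080", "http://localhost:8080/api/v1/quotes/random"))
```

The path plays no part in the comparison, which is why routing by path prefix in the proxy keeps every request in one origin.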

Learnings

- CORS may be easier for beginners to understand, but it is a big security concern if a development configuration gets to production environments. Thus, it might be safer to teach beginners how to use a proxy instead of relying on CORS.

- A proxy may be easier to configure than CORS, since you don’t need to make your UI application talk to different routes depending on the environment.

- Unrelated, but file watch looks great.